QUANTIFYING DYNAMISM

IMAGE-PROCESSING AND LUMINANCE ANALYSIS

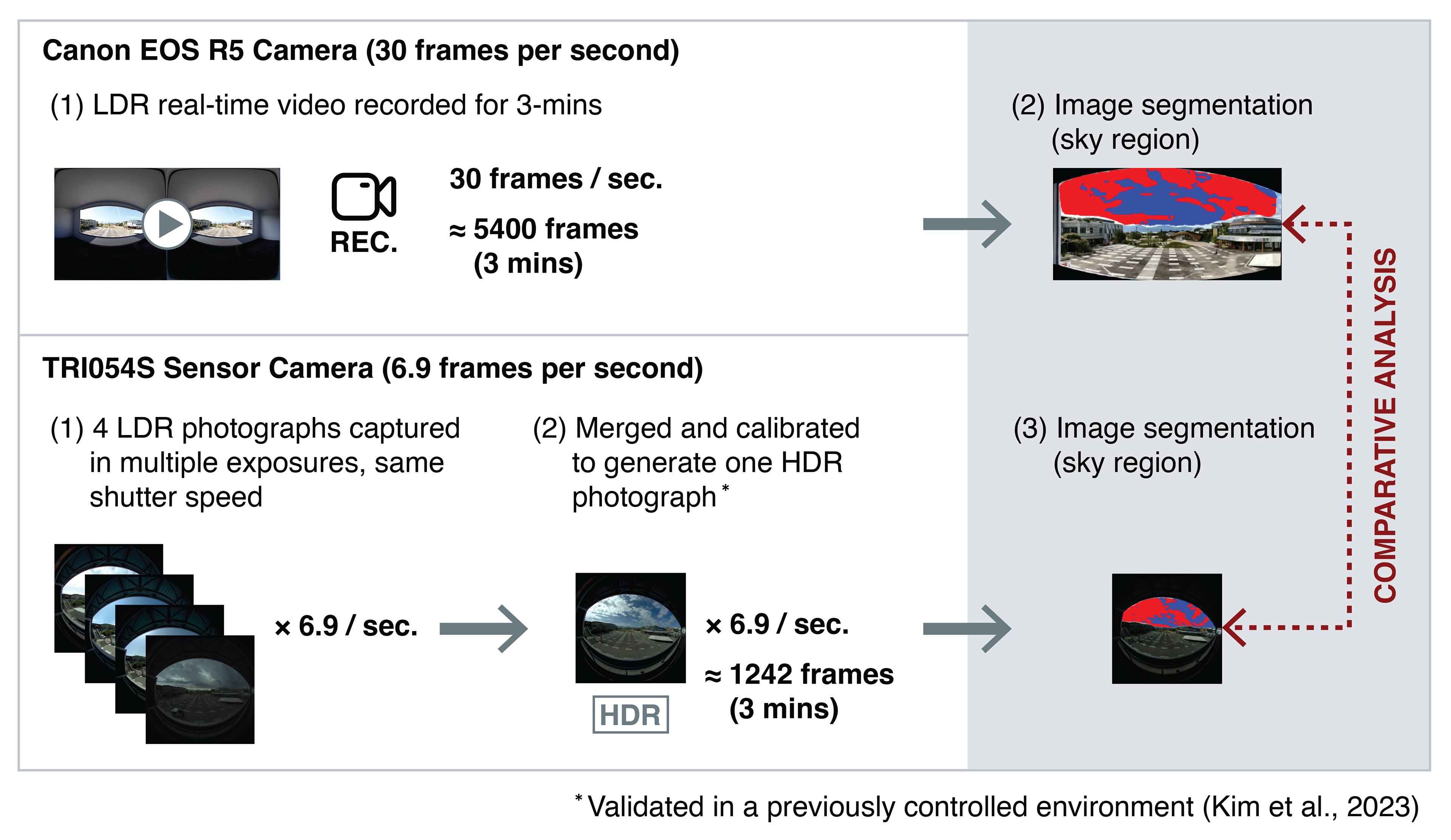

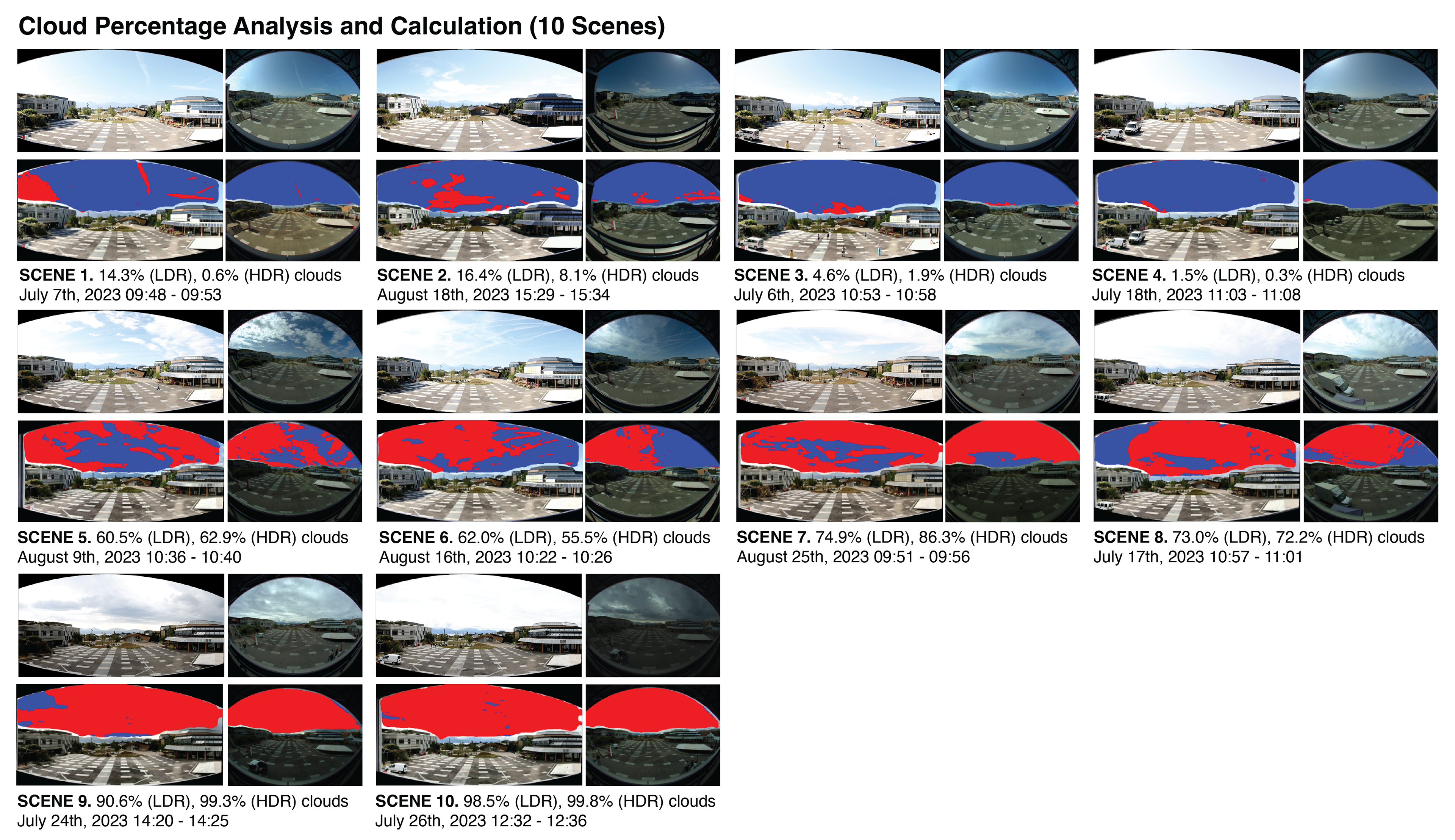

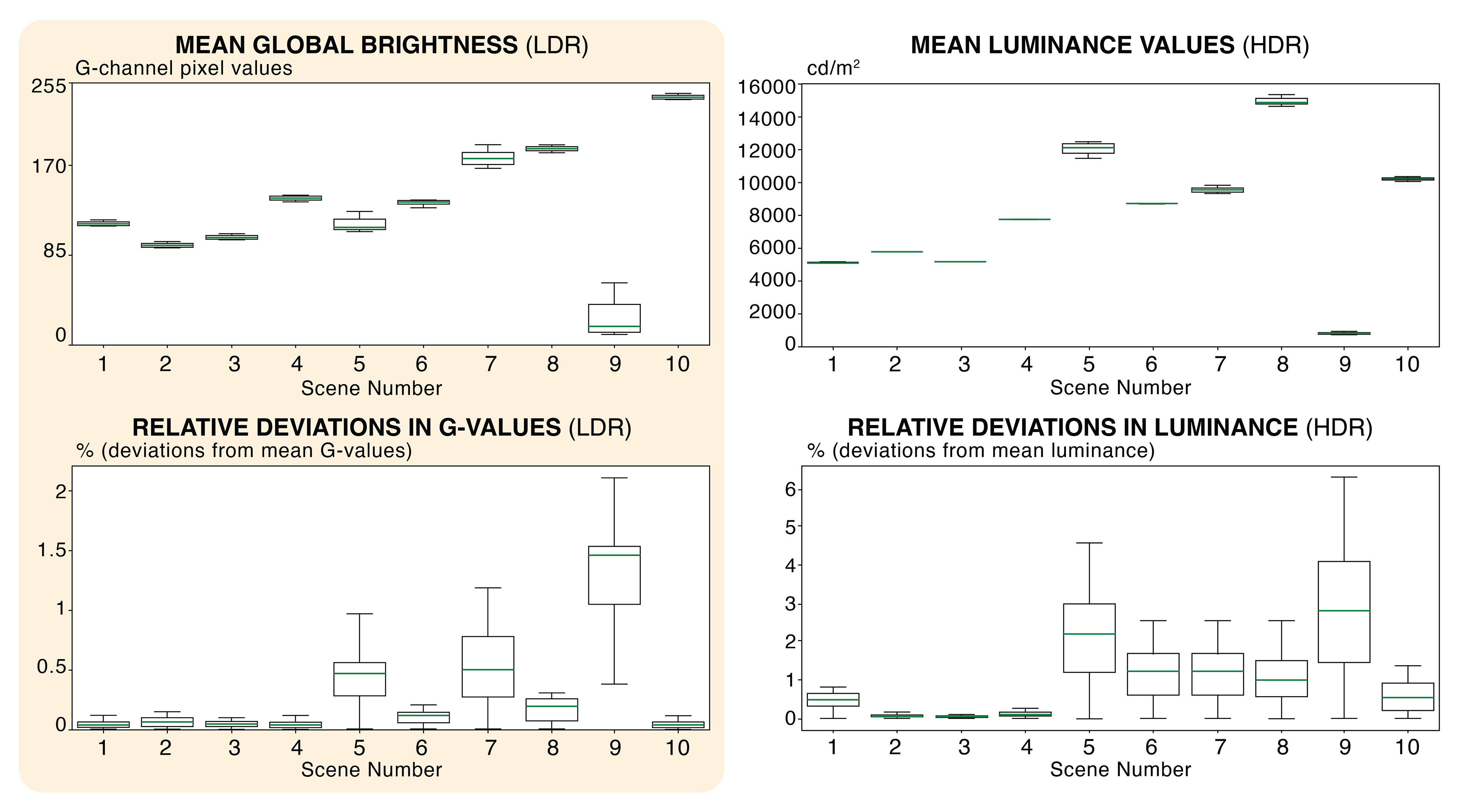

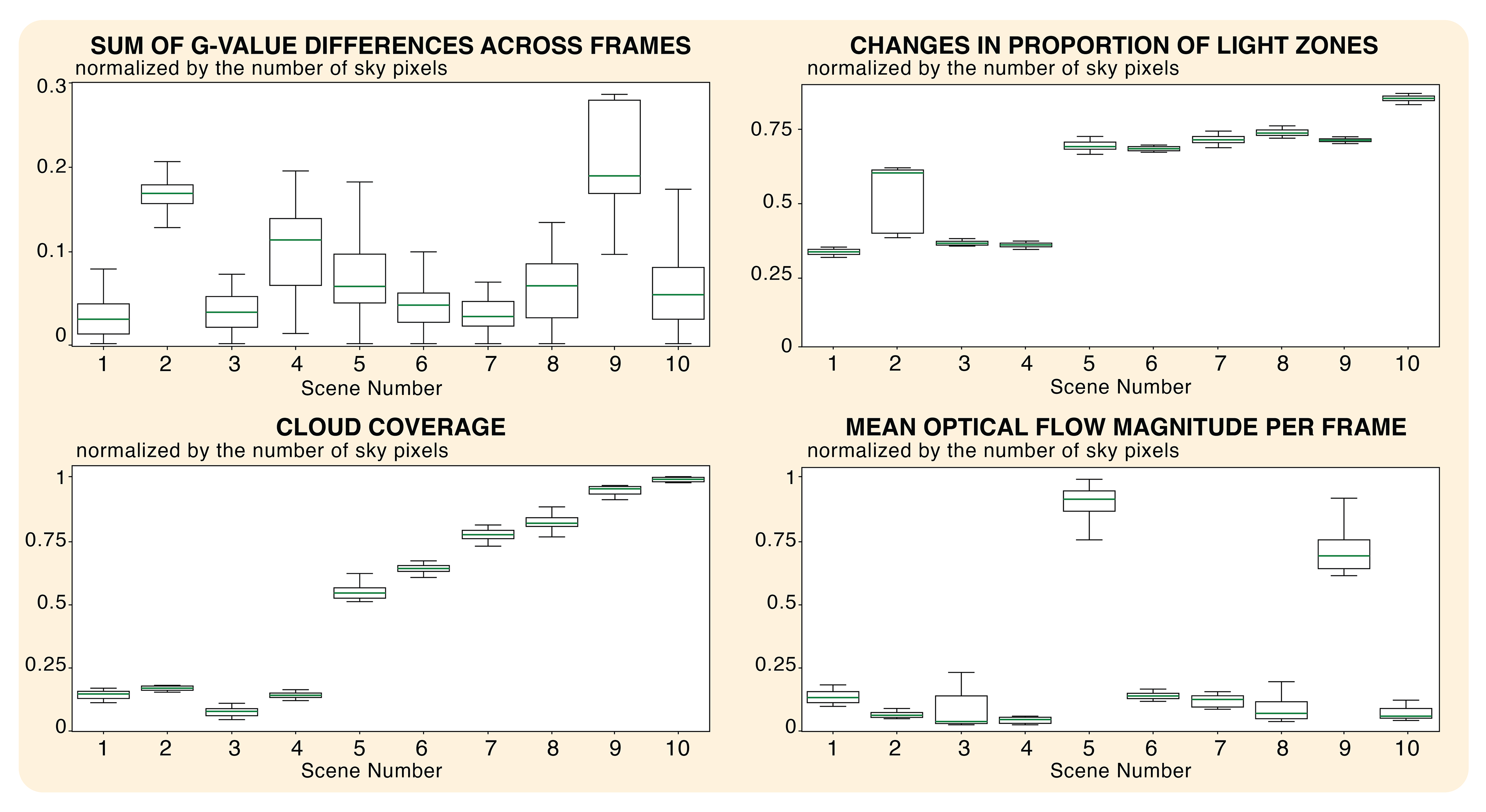

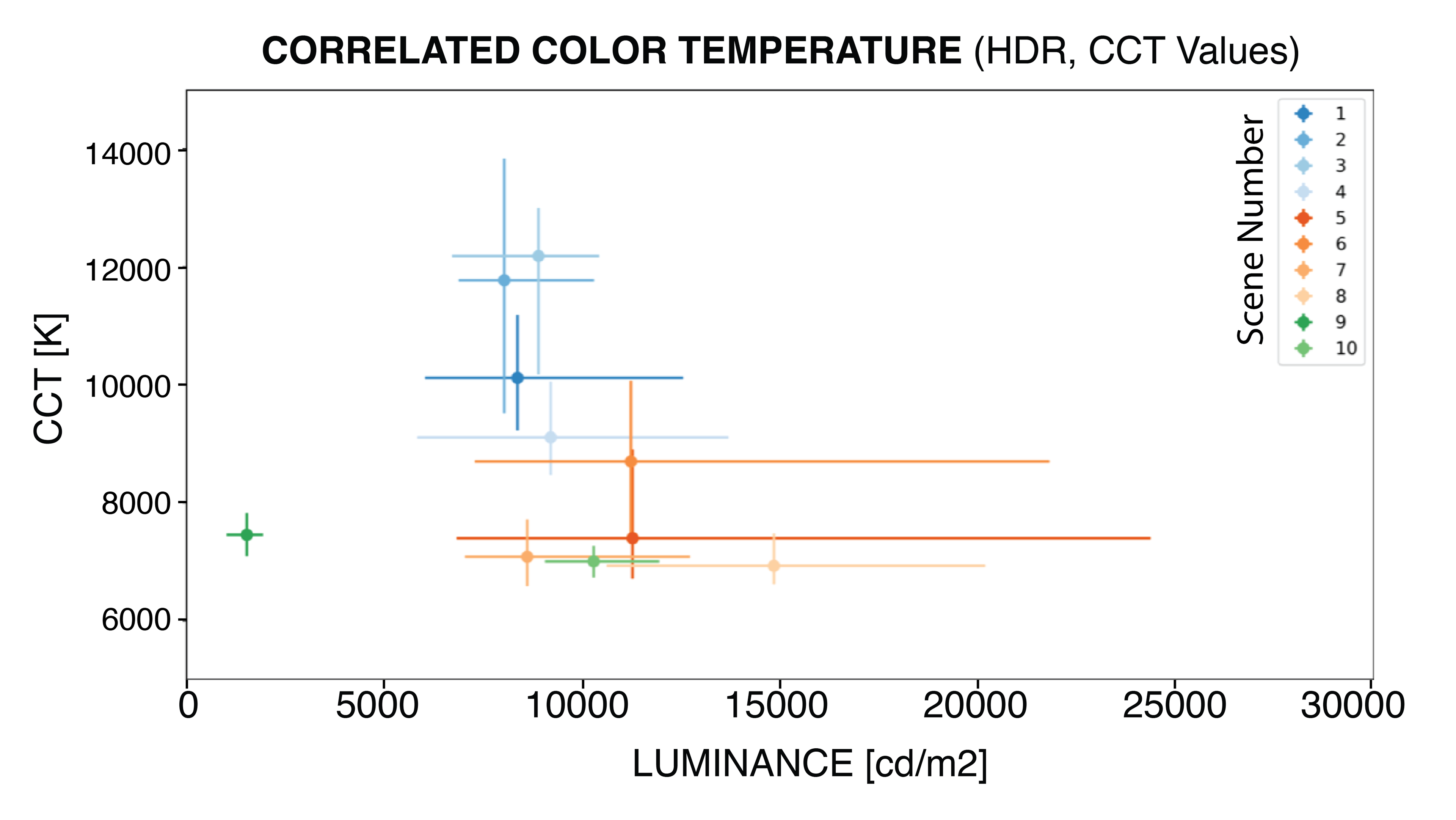

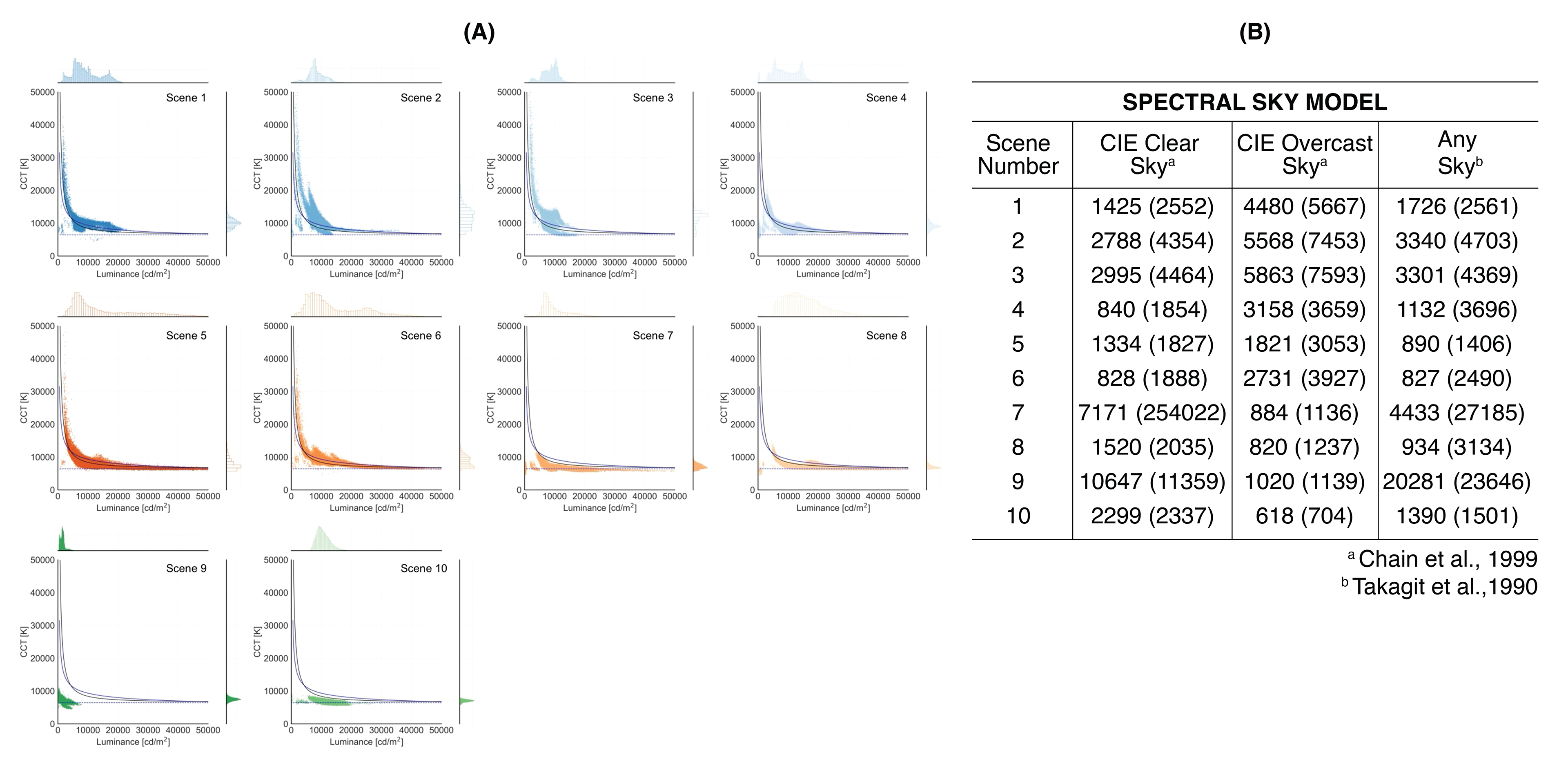

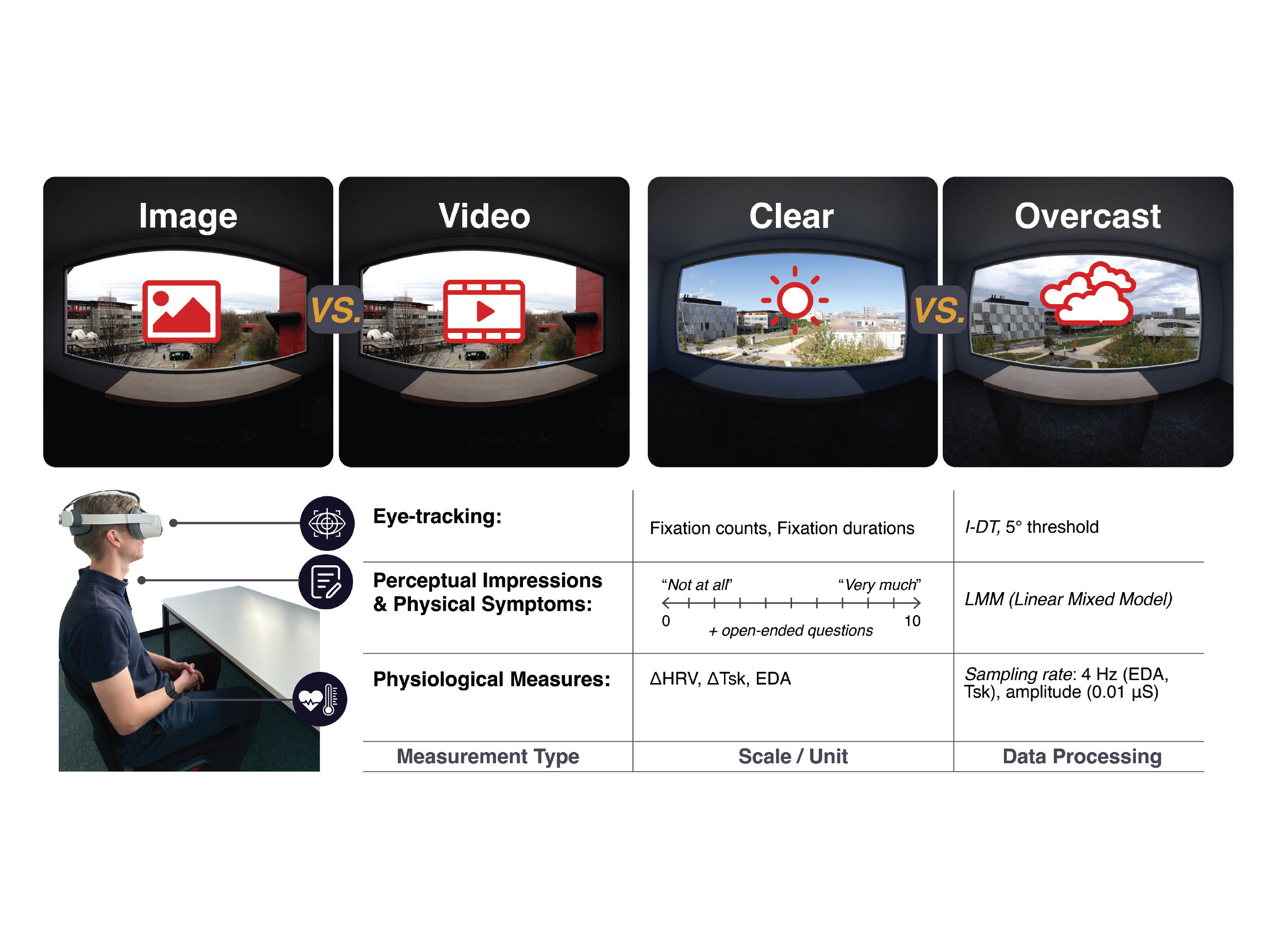

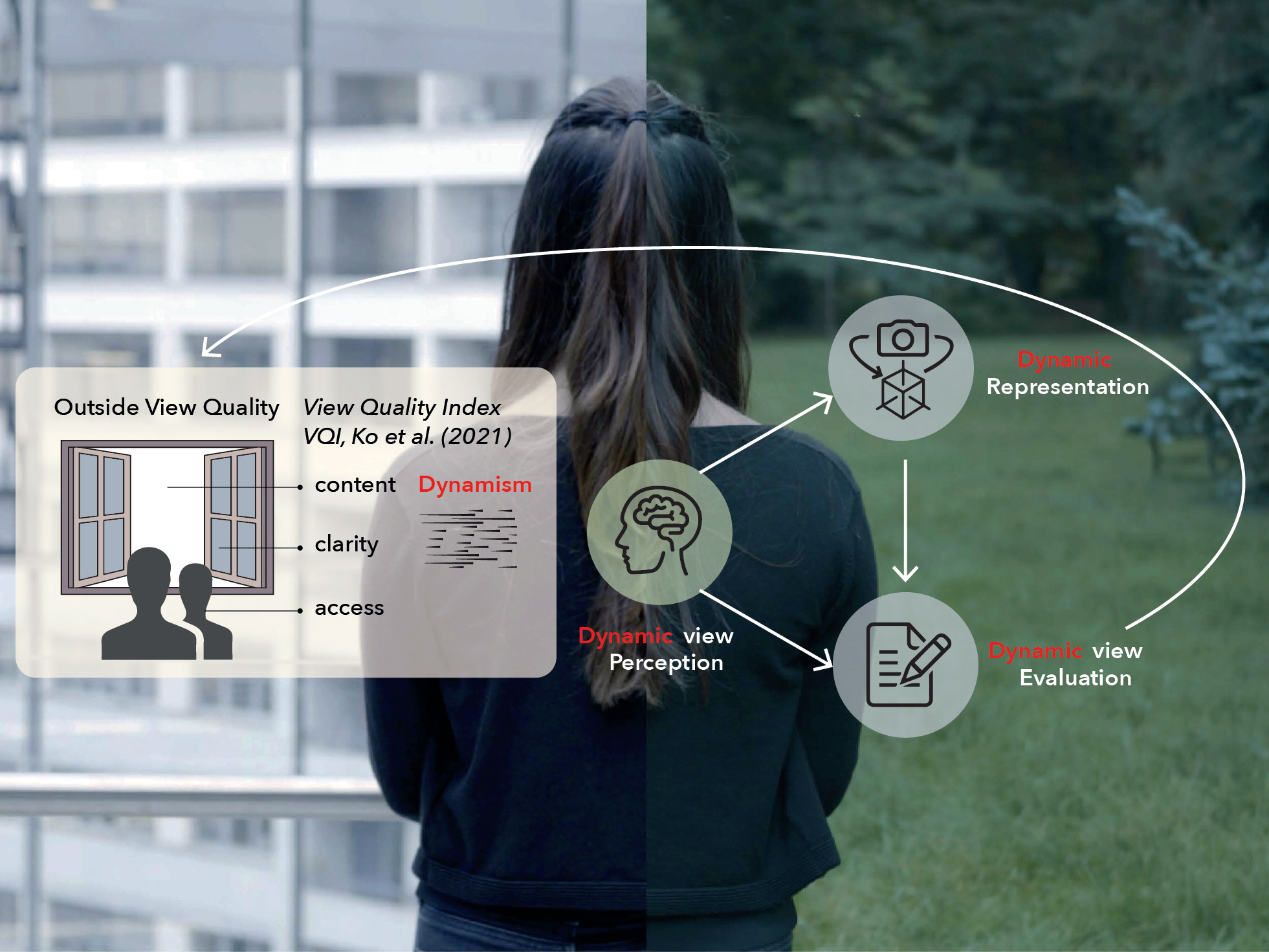

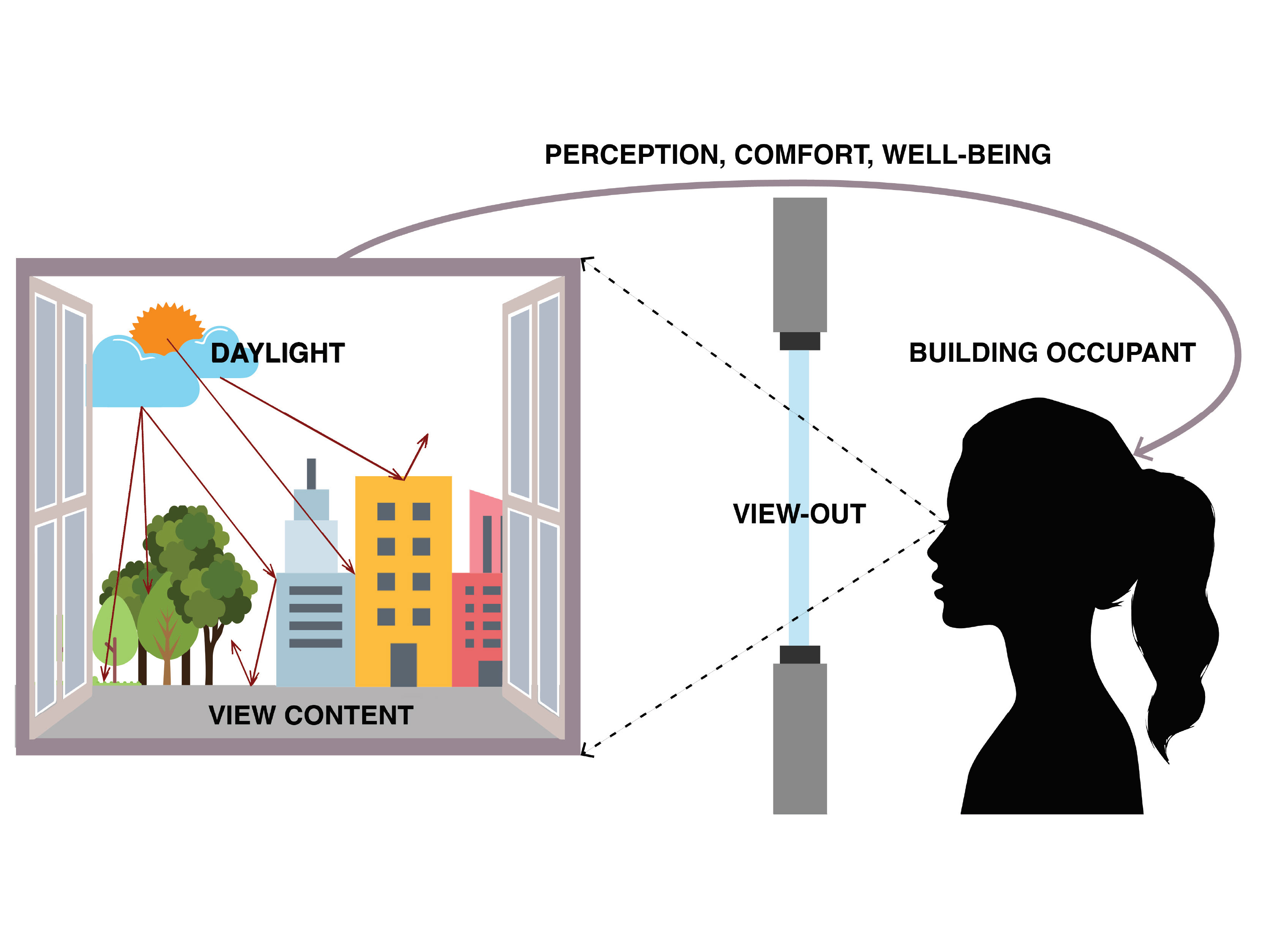

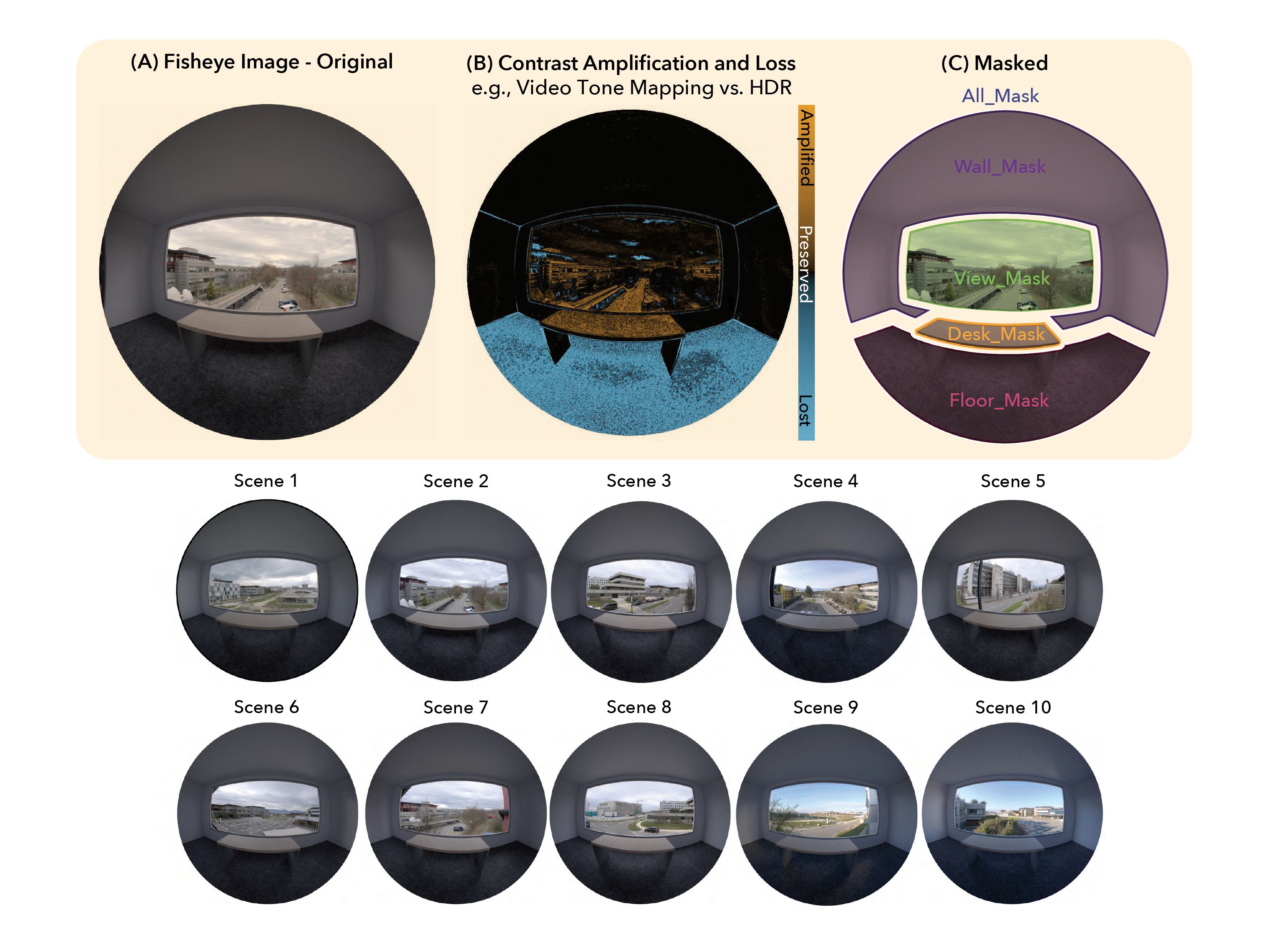

To quantify and characterize temporal variations in daylight, as well as the types and intensities of movement within a scene, we apply image-processing and computational techniques to window-view imagery. This approach captures the dynamic components of a view, covering both passive elements, such as daylight and sky patterns, and active agents, such as people, animals, and road traffic. Our study introduces a novel image-analysis procedure for tracking temporal changes in natural light, an essential factor in daylighting and built-environment research. Its key contribution is the simultaneous use of Low Dynamic Range (LDR) and High Dynamic Range (HDR) camera outputs, whose complementary strengths let us record a wide range of sky conditions and detect fine-grained light variations without specialized equipment. Together, the two reveal both overall light-distribution patterns and subtle luminance fluctuations, while deep-learning techniques distinguish sky from cloud regions. We further validate the results against empirical HDR data to assess the robustness of the analysis. By offering a practical, cost-effective alternative to hyperspectral or photosensor-based methods, the framework makes daylight research more accessible and more widely applicable.
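The luminance side of this analysis can be illustrated with a minimal sketch. It is not the authors' pipeline and rests on stated assumptions: each frame is a flat list of RGB pixels with values in [0, 1]; a boolean sky mask is already available (a stand-in for the deep-learning sky/cloud segmentation); and luminance is computed as *relative* luminance with the standard Rec. 709 weights, linearizing sRGB-encoded LDR pixels first. The helper names (`mean_sky_luminance`, `temporal_variation`) and the two variation metrics (coefficient of variation and mean absolute frame-to-frame change) are hypothetical choices for illustration.

```python
from statistics import mean, pstdev

# Rec. 709 / sRGB primaries: weights for relative luminance from *linear* RGB.
REC709_WEIGHTS = (0.2126, 0.7152, 0.0722)


def srgb_to_linear(c):
    """Undo the sRGB transfer curve for one channel value in [0, 1]."""
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(rgb, is_linear=True):
    """Weighted sum of linear R, G, B; sRGB-encoded (LDR) pixels are linearized first."""
    if not is_linear:
        rgb = tuple(srgb_to_linear(c) for c in rgb)
    return sum(w * c for w, c in zip(REC709_WEIGHTS, rgb))


def mean_sky_luminance(frame, sky_mask, is_linear=True):
    """Average luminance over pixels flagged as sky (assumed mask from a segmentation step)."""
    values = [relative_luminance(px, is_linear)
              for px, is_sky in zip(frame, sky_mask) if is_sky]
    if not values:
        raise ValueError("sky mask selects no pixels")
    return mean(values)


def temporal_variation(series):
    """Return (coefficient of variation, mean absolute frame-to-frame change)."""
    if len(series) < 2:
        raise ValueError("need at least two frames")
    mu = mean(series)
    cv = pstdev(series) / mu if mu > 0 else 0.0
    step = mean(abs(b - a) for a, b in zip(series, series[1:]))
    return cv, step


if __name__ == "__main__":
    # Three toy two-pixel frames; only the first pixel is sky in each frame.
    frames = [[(1.0, 1.0, 1.0), (0.1, 0.1, 0.1)],
              [(0.5, 0.5, 0.5), (0.1, 0.1, 0.1)],
              [(1.0, 1.0, 1.0), (0.1, 0.1, 0.1)]]
    mask = [True, False]
    series = [mean_sky_luminance(f, mask) for f in frames]
    cv, step = temporal_variation(series)
    print("sky luminance per frame:", series)
    print(f"CV = {cv:.3f}, mean step = {step:.3f}")
```

Calibrated HDR captures would instead need a camera-specific conversion to absolute luminance (cd/m²); the relative values in this sketch only support comparisons within a single image sequence.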

2025

Journal Publication

Cho, Poletto, Kim, Karmann, Andersen.

Building and Environment, Volume 269, 112431

Conference Proceeding, Peer-Reviewed

Poletto, Cho, Abbet, Thiran, Andersen.

CISBAT 2025: The Built Environment in Transition, Hybrid International Scientific Conference

3–5 September 2025, Lausanne, Switzerland

Master Thesis Supervision, EPFL

Poletto, Arnaud

August 19th, 2025